Sharing a dictionary between processes in Python

As you might know, Python has a global interpreter lock (GIL), which lets only one thread run Python bytecode at a time. To use more than one core you usually start separate processes, but processes do not share variables. Each new process is basically another interpreter with its own copy of the data, which also means roughly double the memory and processing power.

To overcome this problem, the Python developers created a solution called Manager(). With it you can share data not only between processes but also between computers over a network.

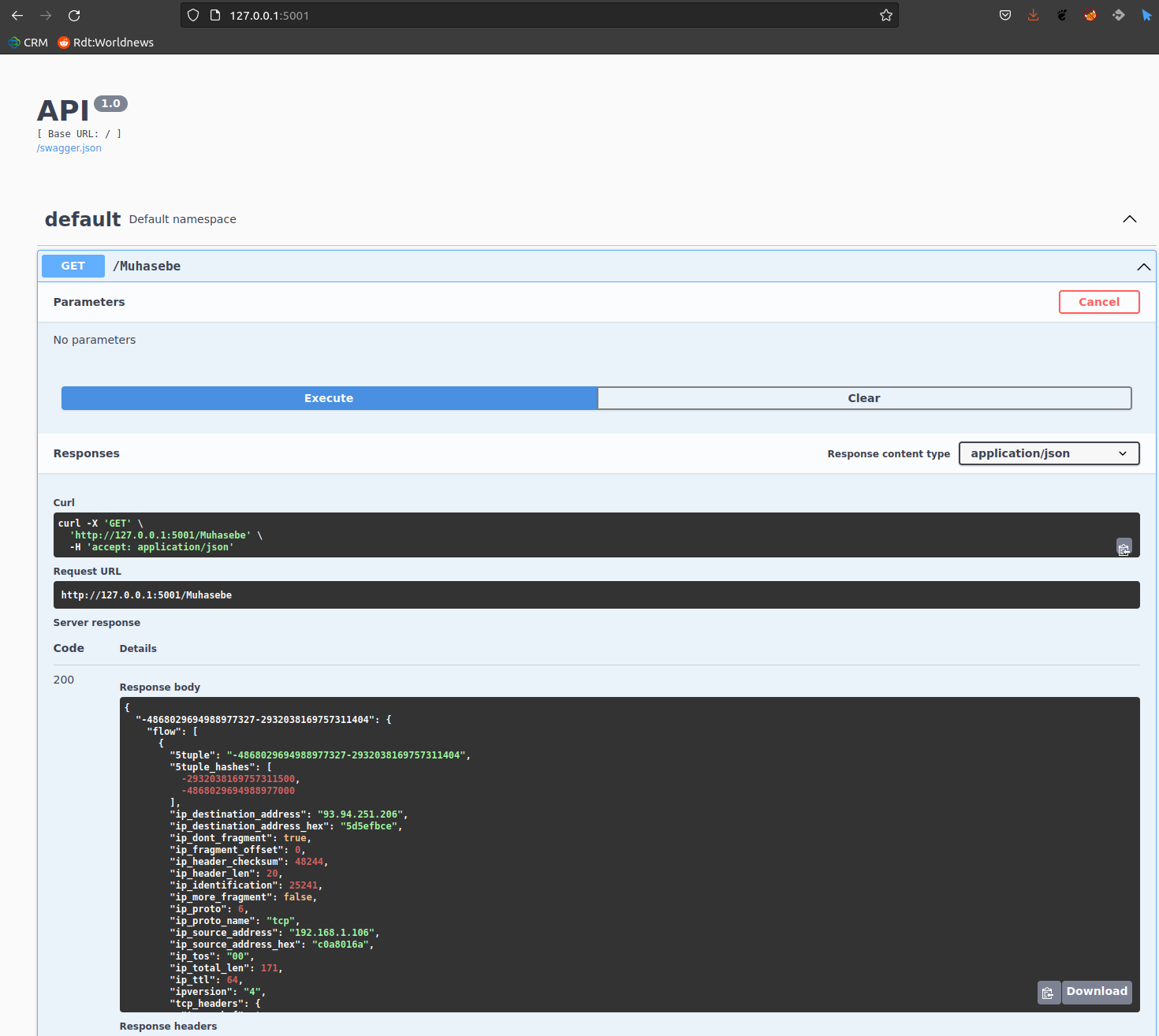

In my case, I needed to update a dictionary in one process while a Flask API served requests based on that dictionary.

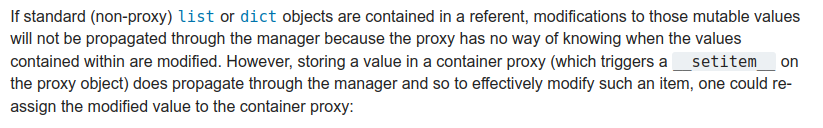

However, there was a problem: when I updated nested dictionaries from a process, the dict was never updated. At first it looks like a bug in the Manager() class, but it is a documented limitation: a proxy has no way of knowing when a plain dict or list stored inside it is modified in place, so those changes are not sent to the manager. Quoting from the Python docs (https://docs.python.org/3/library/multiprocessing.html#proxy-objects):

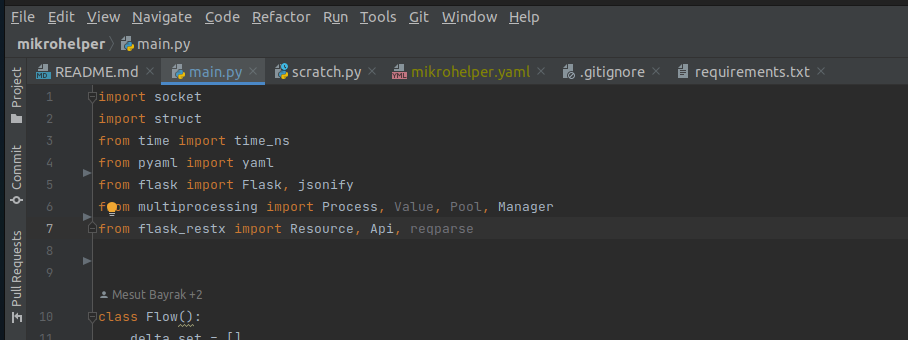

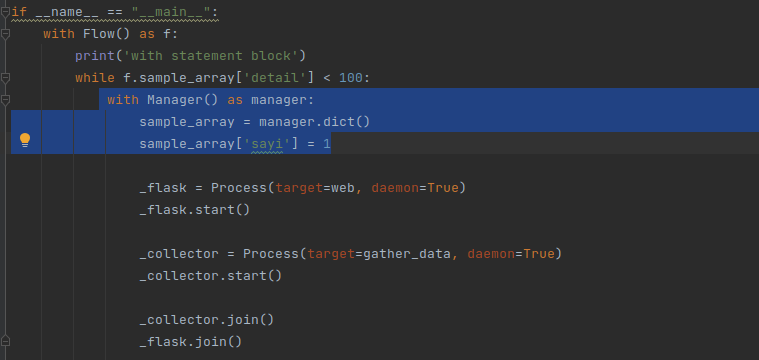

The manager is initiated like this:
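The original snippet did not survive here, so this is only a rough sketch of how a manager is usually started, assuming the standard `multiprocessing.Manager` API. The names `writer` and `run_demo` are made up for illustration and are not from my project.

```python
from multiprocessing import Manager, Process


def writer(shared):
    # Runs in a child process; the write goes through the proxy to the manager.
    shared["detail"] = 1


def run_demo():
    # Manager() starts a server process that owns the real dictionary.
    with Manager() as manager:
        shared = manager.dict()  # a proxy that can be passed to other processes
        p = Process(target=writer, args=(shared,))
        p.start()
        p.join()
        # Copy into a plain dict before the manager shuts down.
        return dict(shared)


if __name__ == "__main__":
    print(run_demo())
```

The `with` block shuts the manager down on exit, so anything you still need afterwards should be copied out first, as `run_demo` does.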

Here is an example that updates the Manager()-owned dictionary:

    class Flow():

        def __init__(self):
            print('init method called')
            self.sample_array = {}

        def __enter__(self):
            print('enter method called')
            self.sample_array['detail'] = 0
            return self

        def increase_detail(self, count):
            self.sample_array['detail'] += count

        def __exit__(self, exc_type, exc_val, exc_tb):
            print("exited")
            print(self.sample_array)

        # def __exit__(self, exc_type, exc_value, exc_traceback):
        #     print('exit method called')

The increase_detail() method represents a stream that increases sample_array['detail'] by 1 on every interval. However, this wasn't happening, and I couldn't find any legitimate solution to it.
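To make the failure concrete, here is a small sketch of what I believe was going on, assuming the dictionary comes from `manager.dict()` and holds a plain nested dict. The key names `count` and `stats` and the function name are hypothetical.

```python
from multiprocessing import Manager


def nested_update_is_lost():
    with Manager() as manager:
        shared = manager.dict()
        shared["count"] = 0
        shared["stats"] = {"detail": 0}  # a plain dict stored inside the proxy

        # Top-level update: read through the proxy, then write back through it.
        shared["count"] += 1

        # Nested update: shared["stats"] hands back a local copy of the inner
        # dict, so the change below never reaches the manager.
        shared["stats"]["detail"] += 1

        return shared["count"], shared["stats"]["detail"]


if __name__ == "__main__":
    print(nested_update_is_lost())
```

The top-level key ends up updated while the nested one keeps its old value, which matches what the docs describe for non-proxy objects stored in a referent.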

What I did was take a copy of the dictionary, do the nested updates on the copy, and assign the whole thing back to the manager, like this:

    def increase_detail(self, count):
        # self.sample_array['detail'] += count
        _sa_array = self.sample_array
        _sa_array['detail'] += count
        self.sample_array = _sa_array
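For a nested dictionary, the same idea can be sketched as follows, assuming a `manager.dict()` proxy. It also shows an alternative that, as far as I know, works on Python 3.6 and later: storing a second `manager.dict()` inside the first, so the inner dict is a proxy too. The key name `stats` and the function `run_workarounds` are hypothetical.

```python
from multiprocessing import Manager


def increase_detail(shared, count):
    stats = shared["stats"]   # local copy of the nested dict
    stats["detail"] += count  # modify the copy
    shared["stats"] = stats   # reassigning the key sends the change to the manager


def run_workarounds():
    with Manager() as manager:
        # Workaround 1: copy, modify, reassign.
        shared = manager.dict()
        shared["stats"] = {"detail": 0}
        increase_detail(shared, 2)
        reassigned = shared["stats"]["detail"]

        # Workaround 2: make the inner dict a proxy as well.
        nested = manager.dict()
        nested["stats"] = manager.dict({"detail": 0})
        nested["stats"]["detail"] += 3
        via_proxy = nested["stats"]["detail"]

        return reassigned, via_proxy


if __name__ == "__main__":
    print(run_workarounds())
```

Neither version makes the read-modify-write atomic. If several processes update the same key at once, wrapping the update in a lock from `manager.Lock()` is probably needed.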

Lame, but it solved my problem. I'd like to know other possible solutions to this problem; if you have one, mail me: mesut